System design

distributed reference counting

Ray implements a distributed reference counting protocol to ensure memory safety and provides various options to recover from failures.

distributed memory

distributed spillback scheduler

Because Ray users express their computation in terms of resources rather than machines, Ray applications can scale transparently from a laptop to a cluster without any code changes. Ray's distributed spillback scheduler and object manager are designed to enable this seamless scaling with low overhead.

object manager

Plasma object store

Ownership

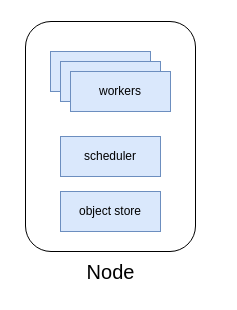

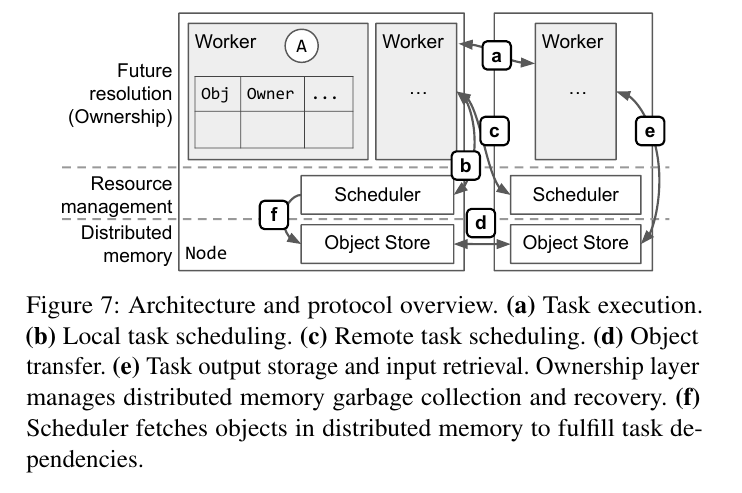

Each node in the cluster hosts one to many workers (usually one per core), one scheduler, and one object store. These processes implement future resolution, resource management, and distributed memory, respectively. Each node and worker process is assigned a unique ID.

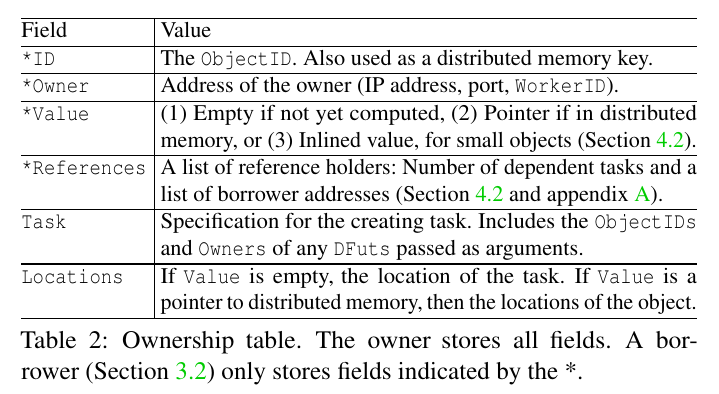

- TaskID: a hash of the parent task’s ID and the number of tasks invoked by the parent task so far. The root TaskID is assigned randomly.

- ObjectID: the concatenation of the TaskID and the object’s index.

- DFut: a tuple of the ObjectID and the owner’s address (Owner).

- ARef (an actor reference): a tuple of the ID and the Owner.
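The ID scheme in the list above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the 16-byte ID width, the use of SHA-256 as the hash, the 4-byte index encoding, and the helper names `root_task_id`, `child_task_id`, and `object_id` are all hypothetical choices, not something the notes specify.

```python
import hashlib
import os
from dataclasses import dataclass

ID_BYTES = 16  # assumed ID width; the notes do not give one


def root_task_id() -> bytes:
    """The root TaskID is assigned randomly."""
    return os.urandom(ID_BYTES)


def child_task_id(parent_id: bytes, num_invoked: int) -> bytes:
    """Hash of the parent's TaskID and the number of tasks it has invoked so far.

    SHA-256 is an assumption; any collision-resistant hash would do here.
    """
    digest = hashlib.sha256(parent_id + num_invoked.to_bytes(8, "big")).digest()
    return digest[:ID_BYTES]


def object_id(task_id: bytes, index: int) -> bytes:
    """Concatenation of the TaskID and the object's index."""
    return task_id + index.to_bytes(4, "big")


@dataclass(frozen=True)
class DFut:
    """Distributed future: (ObjectID, owner's address)."""
    object_id: bytes
    owner: str


@dataclass(frozen=True)
class ARef:
    """Actor reference: (ID, owner's address)."""
    actor_id: bytes
    owner: str
```

Because a child's TaskID depends only on its parent's ID and an invocation counter, the parent can compute it locally without coordinating with any other process.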

An actor is a stateful task that can be invoked multiple times. Like objects, an actor is created through task invocation and owned by the caller. The ownership table is also used to locate and manage actors: the Location is the actor’s address.

What is the difference between an actor and a task? An actor is a stateful task (typically defined as a class), so a series of related invocations can run on the same node without being rescheduled.
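The contrast between a stateless task and a stateful actor can be illustrated with plain Python. This sketch deliberately avoids any Ray calls so that it runs without a cluster; in Ray itself, such a function or class would typically be declared with the `@ray.remote` decorator. The names `square` and `Counter` are hypothetical examples.

```python
def square(x: int) -> int:
    """A task: stateless, so each call could be placed on any node."""
    return x * x


class Counter:
    """An actor: holds state, so successive calls must reach the same instance."""

    def __init__(self) -> None:
        self.total = 0

    def add(self, amount: int) -> int:
        # Each invocation sees the state left behind by the previous one.
        self.total += amount
        return self.total


counter = Counter()
results = [counter.add(n) for n in (1, 2, 3)]  # running totals: 1, 3, 6
```

The running totals only come out right because every call is served by the same instance, which is why an actor stays at a fixed location while tasks can be scheduled freely.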

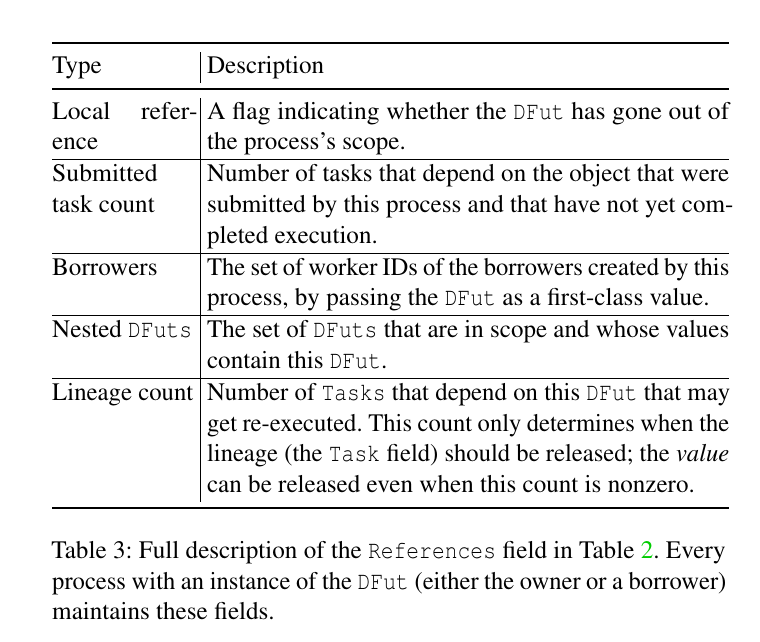

The worker stores one record per future.
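A minimal sketch of such a per-worker table is shown below. The field names (`value`, `location`) and the method names are assumptions made for illustration; the notes only state that there is one record per future and that the Location of an actor is its address.

```python
from dataclasses import dataclass
from typing import Any, Dict, Optional


@dataclass
class Record:
    """One entry per future (hypothetical field layout)."""
    object_id: bytes
    owner: str
    value: Optional[Any] = None      # filled in once the value is known
    location: Optional[str] = None   # where the object (or actor) lives


class OwnershipTable:
    """Table kept by a single worker, keyed by ID."""

    def __init__(self, owner_address: str) -> None:
        self.owner_address = owner_address
        self.records: Dict[bytes, Record] = {}

    def add_future(self, object_id: bytes) -> Record:
        # setdefault keeps exactly one record per future, even if called twice.
        return self.records.setdefault(
            object_id, Record(object_id, self.owner_address)
        )

    def set_location(self, object_id: bytes, location: str) -> None:
        self.records[object_id].location = location

    def locate(self, object_id: bytes) -> Optional[str]:
        record = self.records.get(object_id)
        return record.location if record else None
```

A caller would register a future when it invokes a task, then update the location once the task has been placed.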

A worker requests resources from the scheduling layer to determine task placement. We assume a decentralized scheduler for scalability: each scheduler manages local resources, can serve requests from remote workers, and can redirect a worker to a remote scheduler.
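The request-and-redirect behaviour can be sketched as follows. This is a toy model, not Ray's scheduler: the `Scheduler` class, the `acquire_lease` helper, the CPU-only resource model, and the fact that a scheduler reads its peers' free capacity directly are all simplifying assumptions.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple


@dataclass
class Scheduler:
    """Per-node scheduler that manages only its own local resources."""
    node: str
    free_cpus: int
    peers: Dict[str, "Scheduler"] = field(default_factory=dict)

    def request(self, cpus: int) -> Tuple[str, Optional[str]]:
        """Grant locally if possible, otherwise redirect to a peer with capacity."""
        if cpus <= self.free_cpus:
            self.free_cpus -= cpus
            return ("granted", self.node)
        for peer in self.peers.values():
            # Assumption: peer load is visible here; a real system would
            # rely on periodically exchanged (and possibly stale) load info.
            if cpus <= peer.free_cpus:
                return ("redirect", peer.node)
        return ("rejected", None)


def acquire_lease(local: Scheduler, cpus: int, max_hops: int = 4) -> Optional[str]:
    """Worker side: start at the local scheduler and follow redirects."""
    scheduler = local
    for _ in range(max_hops):
        status, node = scheduler.request(cpus)
        if status == "granted":
            return node
        if status == "rejected":
            return None
        scheduler = scheduler.peers[node]  # redirected to a remote scheduler
    return None


node1 = Scheduler("node1", free_cpus=0)
node2 = Scheduler("node2", free_cpus=2)
node1.peers["node2"] = node2
node2.peers["node1"] = node1
placed_on = acquire_lease(node1, cpus=1)  # node1 is full, so node2 grants
```

Bounding the number of hops keeps a worker from chasing redirects indefinitely when the load information is out of date.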

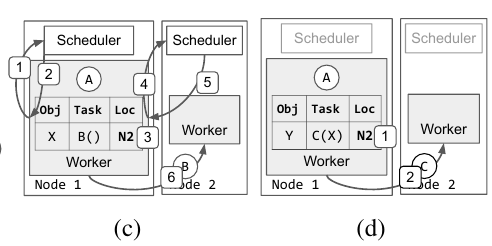

(c) Scheduling with ownership. (1-2) Local scheduler redirects owner to node 2. (3) Update task location. (4-5) Remote scheduler grants worker lease. (6) Task dispatch. (d) Direct scheduling by the owner, using the worker and resources leased from node 2 in (c).

distributed reference counting